NVIDIA (driver/CUDA/cuDNN) offline install - offline analysis server

How to set up an NVIDIA GPU in an offline Linux environment with no internet access

The installer files, however, still have to be downloaded on a computer with internet access and then carried into the server.

These notes record the results from updating the drivers installed on my own Ubuntu 18.04 machine.

- GPU card : GeForce RTX 2070 SUPER

- NVIDIA-driver : 450.51 –> 460.32

- CUDA-Toolkit : 11.0 –> 11.2

- cuDNN : 8.0 –> 8.1

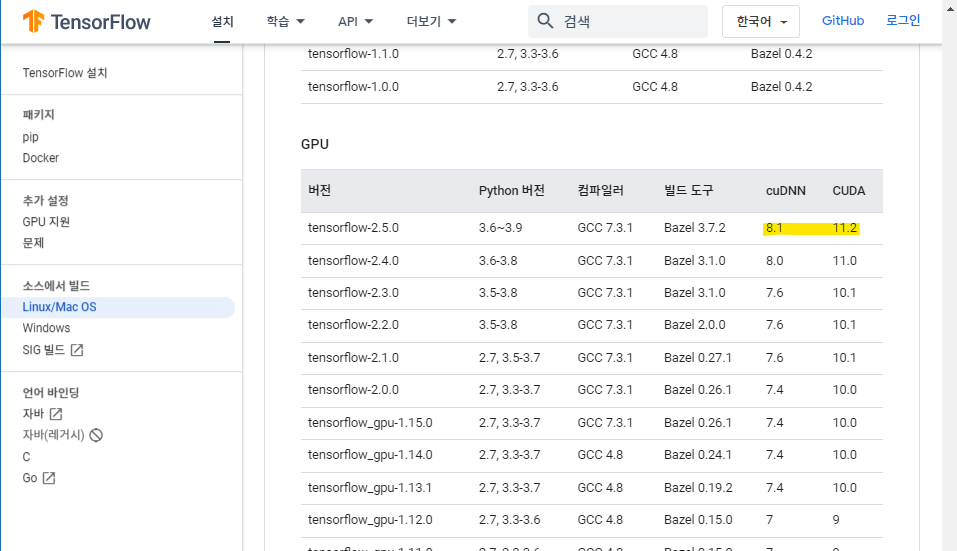

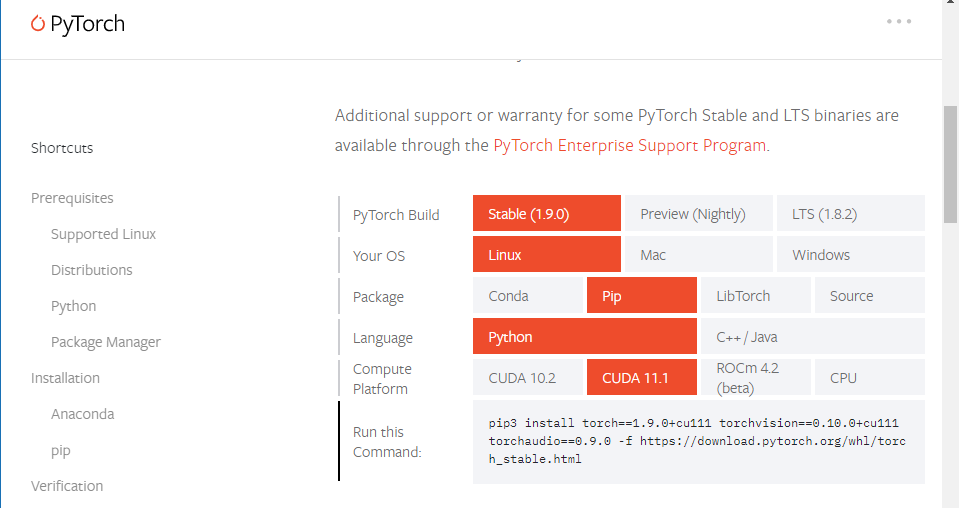

1. TensorFlow & PyTorch

On the sites below, check the version of the package you will use and find the CUDA and cuDNN versions compatible with it.

https://www.tensorflow.org/install/source#tested_build_configurations

https://pytorch.org/get-started/locally/

For PyTorch, a suffix such as +cu111 in the package name means CUDA 11.1, but the package also works with CUDA 11.2.
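The +cuXYZ tag packs the CUDA major version and a single minor digit together. As I understand it, the PyTorch wheels bundle their own CUDA runtime, which is why a +cu111 build runs on a server with CUDA 11.2 as long as the driver is new enough. The helper below is a hypothetical sketch that decodes the tag; its name, and the assumption that the last digit is always the minor version, are mine.

```python
import re
from typing import Optional, Tuple


def cuda_from_torch_version(version: str) -> Optional[Tuple[int, int]]:
    """Decode the CUDA version from a PyTorch version string such as '1.9.0+cu111'.

    Assumes the local tag is '+cu' followed by the major digits and exactly one
    minor digit ('cu111' -> 11.1, 'cu102' -> 10.2). CPU-only builds ('+cpu')
    and untagged versions return None.
    """
    match = re.search(r"\+cu(\d+)(\d)$", version)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


print(cuda_from_torch_version("1.9.0+cu111"))  # (11, 1)
print(cuda_from_torch_version("1.9.0+cpu"))    # None
```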

2. Preparing the installation files

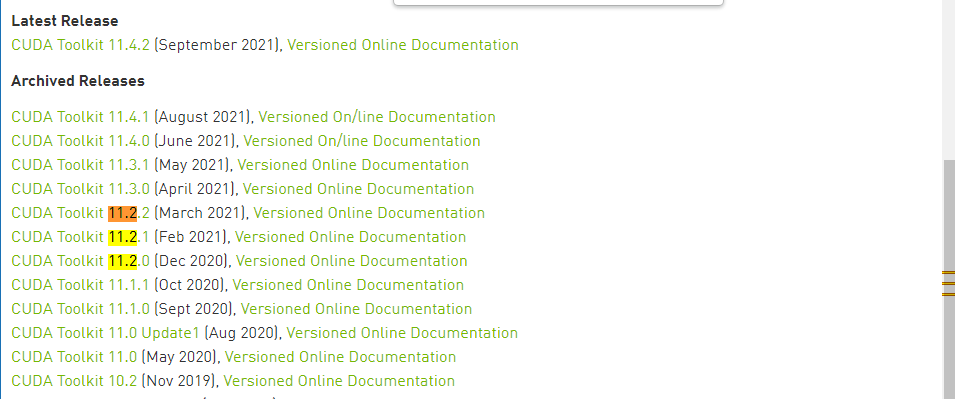

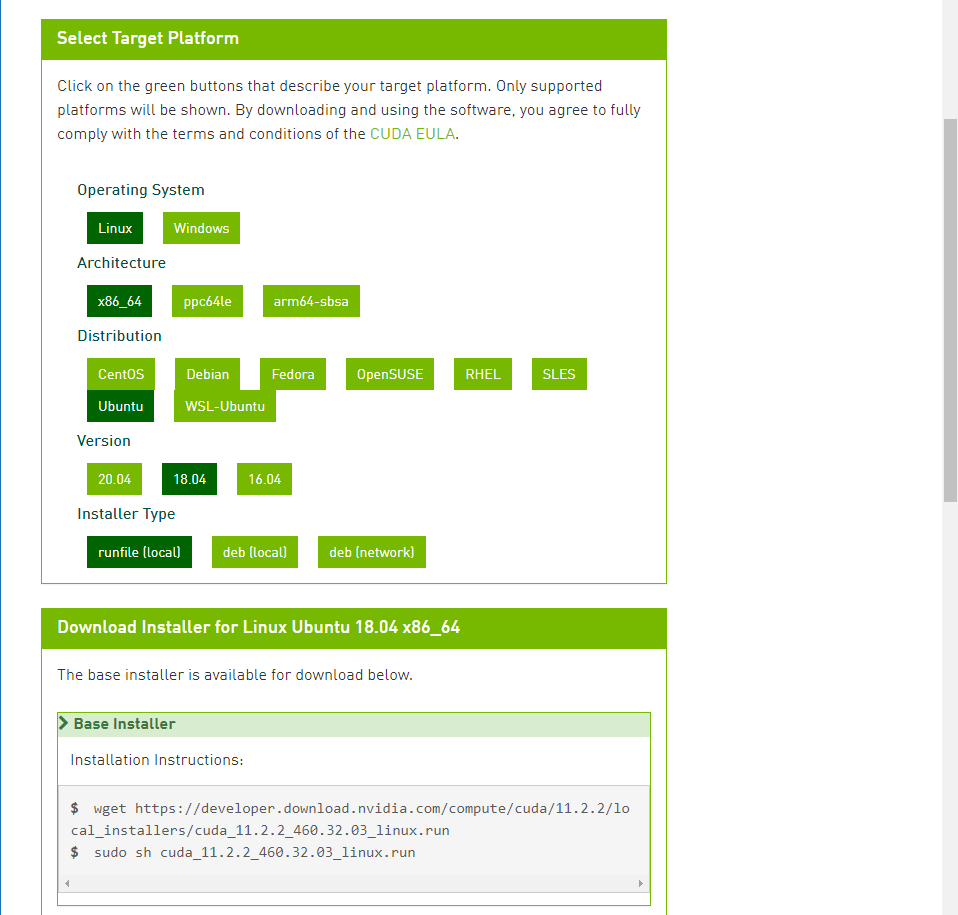

2.1. CUDA Toolkit

Download the CUDA version you plan to install from the site below.

Only the major and minor versions need to match; using a higher third (patch) digit is fine.

https://developer.nvidia.com/cuda-toolkit-archive


$ wget https://developer.download.nvidia.com/compute/cuda/11.2.2/local_installers/cuda_11.2.2_460.32.03_linux.run

If the internet-connected computer runs Windows, paste the URL that follows wget into a browser to download the file.
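The rule of thumb from this section (major and minor must match, the patch digit may be higher) is easy to encode if you want to check a candidate download automatically. This is a minimal sketch with hypothetical function names; it assumes version strings of the form major.minor or major.minor.patch.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '11.2.2' into (11, 2, 2)."""
    return tuple(int(part) for part in text.split("."))


def patch_upgrade_ok(required: str, candidate: str) -> bool:
    """Major and minor must match; the candidate's patch may be equal or higher.

    Expects at least 'major.minor'. A missing patch component is treated as 0,
    so '11.2' accepts '11.2.2'.
    """
    req = parse_version(required) + (0,)
    cand = parse_version(candidate) + (0,)
    if req[:2] != cand[:2]:
        return False  # different major or minor version
    return cand[2] >= req[2]  # same or newer patch level


print(patch_upgrade_ok("11.2", "11.2.2"))  # True
print(patch_upgrade_ok("11.2", "11.3.0"))  # False
```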

2.2. NVIDIA Driver

https://www.nvidia.com/Download/index.aspx

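If you search Google for "nvidia-driver install", most guides tell you to look up your graphics card on the site above and download the driver from there. That is fine if you only need the NVIDIA driver, but since we will also use CUDA, we need to check which driver version it requires.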

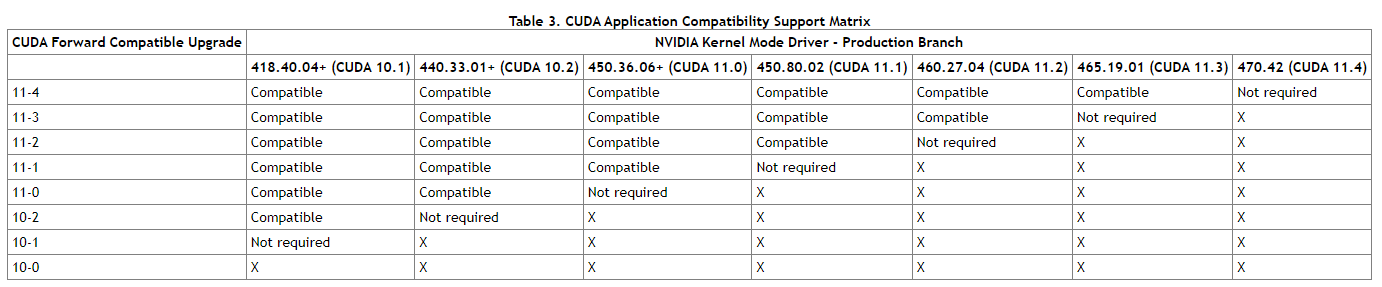

https://docs.nvidia.com/deploy/cuda-compatibility/


As the figure above shows, each CUDA version has a corresponding NVIDIA driver version.

- 11.4.x : 470.xx

- 11.3.x : 465.xx

- 11.2.x : 460.xx « the version I am updating to

- 11.1.x : 455.xx

- 11.0.x : 450.xx « the version I currently have installed

The easiest way to check, though, is the file name of the CUDA installer downloaded above: cuda_11.2.2_460.32.03_linux.run » 460.32.03
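Reading the driver version out of the runfile name can be scripted. The sketch below assumes the layout seen in this guide, cuda_&lt;toolkit version&gt;_&lt;bundled driver version&gt;_linux.run; the pattern and function name are my own, not an NVIDIA-documented format.

```python
import re
from typing import Tuple

# Assumed file-name layout, based on the example above:
# cuda_<toolkit version>_<bundled driver version>_linux.run
RUNFILE_PATTERN = re.compile(r"cuda_(\d+\.\d+\.\d+)_(\d+\.\d+(?:\.\d+)?)_linux\.run")


def parse_cuda_runfile(name: str) -> Tuple[str, str]:
    """Return (toolkit_version, driver_version) encoded in a CUDA runfile name."""
    match = RUNFILE_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"unexpected CUDA runfile name: {name!r}")
    return match.group(1), match.group(2)


toolkit, driver = parse_cuda_runfile("cuda_11.2.2_460.32.03_linux.run")
print(toolkit, driver)  # 11.2.2 460.32.03
```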

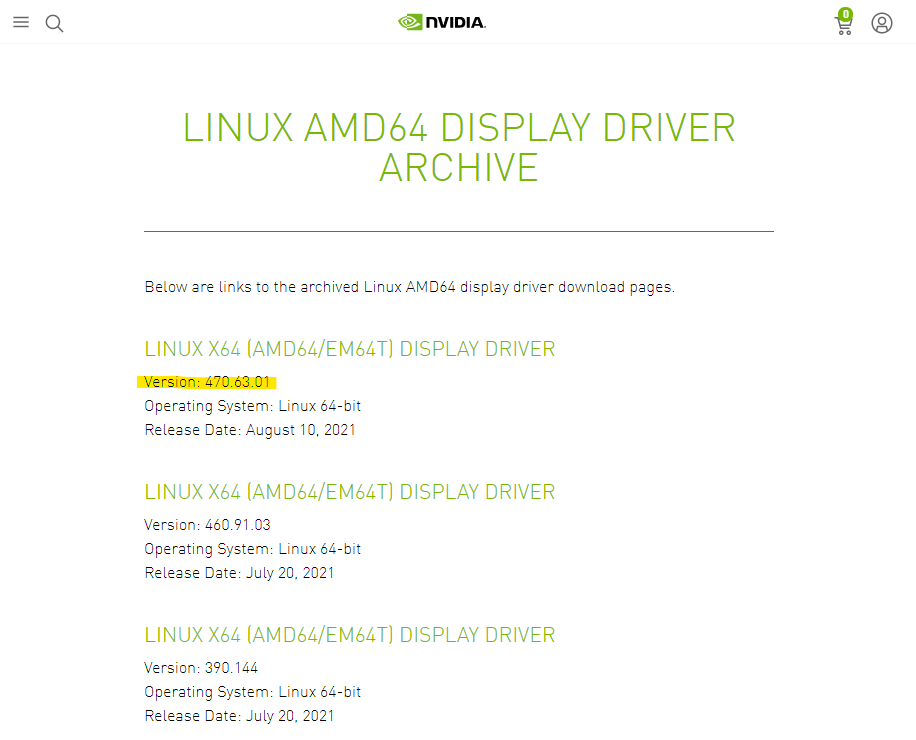

https://www.nvidia.com/en-us/drivers/unix/linux-amd64-display-archive/

You can search for and download a specific driver version on the site above.


$ wget https://us.download.nvidia.com/XFree86/Linux-x86_64/460.32.03/NVIDIA-Linux-x86_64-460.32.03.run
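The driver URL above follows a visible pattern: the version appears once as a directory and once in the file name. A small hypothetical helper can fill it in for another version. Whether every archived version lives under the same us.download.nvidia.com path is an assumption, so confirm the link on the archive page before relying on it.

```python
BASE_URL = "https://us.download.nvidia.com/XFree86/Linux-x86_64"


def driver_runfile_url(version: str) -> str:
    """Build the .run download URL for a Linux x86_64 driver version.

    The layout is copied from the 460.32.03 URL in this guide and is assumed,
    not guaranteed, to hold for other versions.
    """
    filename = f"NVIDIA-Linux-x86_64-{version}.run"
    return f"{BASE_URL}/{version}/{filename}"


print(driver_runfile_url("460.32.03"))
```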

2.3. cuDNN

https://developer.nvidia.com/rdp/cudnn-archive

Download the cuDNN version you plan to install from the site above. As with CUDA, only the major and minor versions need to match; a higher third (patch) digit is fine.

Downloading cuDNN requires signing up for an NVIDIA account.

3. Install

Copy the three files prepared above onto the offline server.

- NVIDIA-Linux-x86_64-460.32.03.run

- cuda_11.2.2_460.32.03_linux.run

- cudnn-11.2-linux-x64-v8.1.1.33.tgz
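Because a missing file means another trip to the internet-connected machine, it can help to check the transfer before starting. A minimal sketch; the file list mirrors the three files above and the function names are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List, Sequence

# The three files used in this guide; adjust to your own versions.
REQUIRED_FILES = (
    "NVIDIA-Linux-x86_64-460.32.03.run",
    "cuda_11.2.2_460.32.03_linux.run",
    "cudnn-11.2-linux-x64-v8.1.1.33.tgz",
)


def missing_files(present: Iterable[str], required: Sequence[str] = REQUIRED_FILES) -> List[str]:
    """Return the required file names that are not in `present`."""
    present_set = set(present)
    return [name for name in required if name not in present_set]


def missing_in_dir(directory: str) -> List[str]:
    """Check the directory on the offline server where the files were copied."""
    return missing_files(p.name for p in Path(directory).iterdir() if p.is_file())
```

On the server, `missing_in_dir(".")` run from the copy directory should return an empty list.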

3.1. NVIDIA Driver

3.1.1. Stop the X server

If the X server is running, the NVIDIA driver or CUDA Toolkit installation will not proceed.

Any containers running under nvidia-docker must also be stopped.

In other words, no process may be holding GPU memory (the Processes list in nvidia-smi should be empty).


$ nvidia-smi

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 450.51 Driver Version: 450.51 CUDA Version: 11.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 GeForce RTX 207... Off | 00000000:07:00.0 Off | N/A |

| 0% 48C P8 32W / 215W | 16MiB / 7979MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 1632 G /usr/lib/xorg/Xorg 16MiB |

+-----------------------------------------------------------------------------+


$ systemctl stop gdm3

# or the equivalent command for your display manager


$ nvidia-smi

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 450.51 Driver Version: 450.51 CUDA Version: 11.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 GeForce RTX 207... Off | 00000000:07:00.0 Off | N/A |

| 33% 48C P0 38W / 215W | 0MiB / 7979MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+
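To confirm in a script that nothing holds GPU memory before running the installer, the Processes table can be parsed. This is a sketch under the assumption that the plain `nvidia-smi` layout matches the output above; on the server you would pass it `subprocess.check_output(["nvidia-smi"], universal_newlines=True)`. The function name is hypothetical.

```python
from typing import List


def gpu_processes(smi_output: str) -> List[str]:
    """Return the rows of the 'Processes:' table printed by plain `nvidia-smi`.

    An empty list means nothing is holding GPU memory.
    """
    lines = smi_output.splitlines()
    start = next((i for i, line in enumerate(lines) if "Processes:" in line), None)
    if start is None:
        raise ValueError("no 'Processes:' section in the nvidia-smi output")
    rows: List[str] = []
    in_body = False
    for line in lines[start:]:
        if line.startswith("|====="):
            in_body = True  # process rows start after the '=====' rule
            continue
        if in_body and line.startswith("+---"):
            break  # bottom border of the table
        if in_body:
            text = line.strip("| ")
            if text and text != "No running processes found":
                rows.append(text)
    return rows
```

Before `systemctl stop gdm3` this returns the Xorg row; afterwards it should return an empty list.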

3.1.2. Install the NVIDIA driver

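Even if a driver is already installed, you do not need to uninstall it first; the installer removes the existing driver itself, as the prompt below shows.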


$ sh NVIDIA-Linux-x86_64-460.32.03.run

Uncompressing NVIDIA Accelerated Graphics Driver for Linux-x86_64 460.32.03...

There appears to already be a driver installed on your system (version: 450.51). As part of installing this driver (version: 460.32.03), the existing driver will be uninstalled. Are you sure you want to continue?

yes

The distribution-provided pre-install script failed! Are you sure you want to continue?

yes

Would you like to register the kernel module sources with DKMS? This will allow DKMS to automatically build a new module, if you install a different kernel later.

yes

Install NVIDIA’s 32-bit compatibility libraries?

yes

Would you like to run the nvidia-xconfig utility to automatically update your X configuration file so that the NVIDIA X driver will be used when you restart X? Any pre-existing X configuration file will be backed up.

yes

Your X configuration file has been successfully updated. Installation of the NVIDIA Accelerated Graphics Driver for Linux-x86_64 (version: 460.32.03) is now complete.

yes

3.1.3. NVIDIA driver installation complete


$ nvidia-smi

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 460.32.03 Driver Version: 460.32.03 CUDA Version: 11.4 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:07:00.0 Off | N/A |

| 30% 43C P0 44W / 215W | 0MiB / 7979MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+
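To read the new driver version back in a script, the first line of the `nvidia-smi` output can be parsed; alternatively, `nvidia-smi --query-gpu=driver_version --format=csv,noheader` prints just the driver version. The sketch assumes the header layout shown above, and the function name is hypothetical.

```python
import re
from typing import Tuple

HEADER = re.compile(r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*([\d.]+)")


def parse_smi_header(smi_output: str) -> Tuple[str, str]:
    """Return (driver_version, cuda_version) from the nvidia-smi header line."""
    match = HEADER.search(smi_output)
    if match is None:
        raise ValueError("driver/CUDA version not found in nvidia-smi output")
    return match.group(1), match.group(2)
```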

3.2. CUDA Toolkit

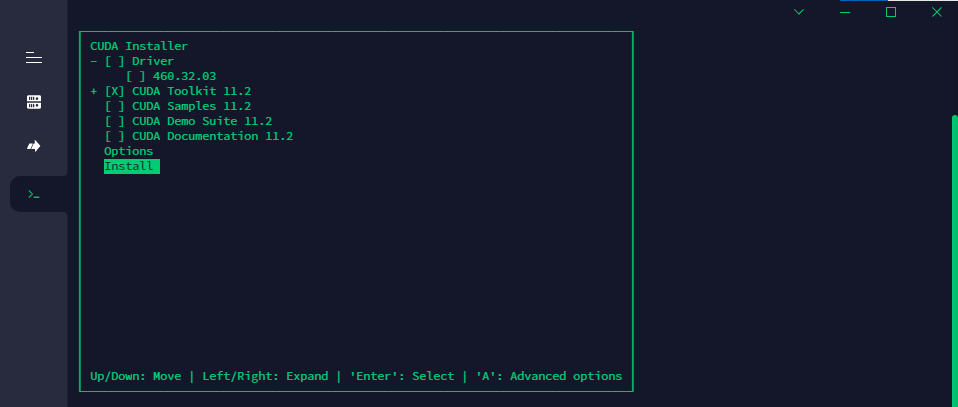

3.2.1. Install CUDA


$ sh cuda_11.2.2_460.32.03_linux.run

Existing package manager installation of the driver found. It is strongly recommended that you remove this before continuing.

Continue

Do you accept the above EULA? (accept/decline/quit):

accept

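In the component menu, deselect everything except the CUDA Toolkit (the driver was already installed in step 3.1):

[X] CUDA Toolkit 11.2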

A symlink already exists at /usr/local/cuda. Update to this installation?

yes


===========

= Summary =

===========

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-11.2/

Samples: Not Selected

Please make sure that

- PATH includes /usr/local/cuda-11.2/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-11.2/lib64, or, add /usr/local/cuda-11.2/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run cuda-uninstaller in /usr/local/cuda-11.2/bin

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 460.00 is required for CUDA 11.2 functionality to work.

To install the driver using this installer, run the following command, replacing <CudaInstaller> with the name of this run file:

sudo <CudaInstaller>.run --silent --driver
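The warning only asks for a driver of at least 460.00, and the driver installed in step 3.1 already satisfies it. The comparison can be sketched as below; comparing dot-separated integers is my own simplification of how driver versions order, and the names are hypothetical.

```python
from typing import Tuple


def version_tuple(text: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in text.split("."))


def driver_meets(installed: str, minimum: str) -> bool:
    """True if the installed driver version is >= the minimum (e.g. '460.00')."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so '460' and '460.00' compare as equal
    a = a + (0,) * (width - len(a))
    b = b + (0,) * (width - len(b))
    return a >= b


print(driver_meets("460.32.03", "460.00"))  # True
print(driver_meets("450.51", "460.00"))     # False
```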

3.3. cuDNN

3.3.1. Extract and copy the cuDNN files


$ tar -zxvf cudnn-11.2-linux-x64-v8.1.1.33.tgz

$ cd cuda

$ cp include/cudnn* /usr/local/cuda-11.2/include/

$ cp -P lib64/libcudnn* /usr/local/cuda-11.2/lib64/    # -P copies the libcudnn symlinks as symlinks

$ chmod a+r /usr/local/cuda-11.2/lib64/libcudnn*
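If you script this step for several servers, a Python equivalent is sketched below (function name hypothetical; it must run as root to write under /usr/local). It copies symlinks as symlinks, which is what `cp -P` does and what NVIDIA's tar-file install instructions use, so libcudnn.so and libcudnn.so.8 stay links to the real library instead of becoming duplicate copies; otherwise ldconfig may later warn that these files are not symbolic links.

```python
import glob
import os
import shutil
import stat
from typing import List


def install_cudnn(extracted_dir: str, cuda_root: str) -> List[str]:
    """Copy cuDNN headers and libraries into cuda_root, keeping symlinks as symlinks."""
    copied = []
    for pattern, subdir in (("include/cudnn*", "include"), ("lib64/libcudnn*", "lib64")):
        dest_dir = os.path.join(cuda_root, subdir)
        os.makedirs(dest_dir, exist_ok=True)
        for src in sorted(glob.glob(os.path.join(extracted_dir, pattern))):
            dest = os.path.join(dest_dir, os.path.basename(src))
            if os.path.lexists(dest):
                os.remove(dest)  # replace an older file or link with the same name
            # follow_symlinks=False copies a symlink as a symlink (like cp -P)
            shutil.copy2(src, dest, follow_symlinks=False)
            copied.append(dest)
    # Equivalent of `chmod a+r` on the copied library files (links are skipped)
    for path in glob.glob(os.path.join(cuda_root, "lib64", "libcudnn*")):
        if not os.path.islink(path):
            mode = os.stat(path).st_mode
            os.chmod(path, mode | stat.S_IRUSR | stat.S_IRGRP | stat.S_IROTH)
    return copied
```

Usage, after `tar -zxvf`: `install_cudnn("cuda", "/usr/local/cuda-11.2")`.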

3.3.2. Verify the cuDNN installation


$ cat /usr/local/cuda-11.2/include/cudnn_version.h | grep CUDNN_MAJOR -A 2


#define CUDNN_MAJOR 8

#define CUDNN_MINOR 1

#define CUDNN_PATCHLEVEL 1

--

#define CUDNN_VERSION (CUDNN_MAJOR * 1000 + CUDNN_MINOR * 100 + CUDNN_PATCHLEVEL)

#endif /* CUDNN_VERSION_H */
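The same check can be done in Python by reading the three macros and applying the header's own CUDNN_VERSION formula; for the 8.1.1 header above that gives 8101. A minimal sketch with a hypothetical function name, assuming the `#define NAME value` layout shown.

```python
import re
from typing import Tuple


def cudnn_version(header_text: str) -> Tuple[int, int, int, int]:
    """Read CUDNN_MAJOR/MINOR/PATCHLEVEL from cudnn_version.h text.

    Returns (major, minor, patch, combined), where combined follows the
    header's formula: MAJOR * 1000 + MINOR * 100 + PATCHLEVEL.
    """
    values = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{name}\s+(\d+)", header_text)
        if match is None:
            raise ValueError(f"{name} not found")
        values.append(int(match.group(1)))
    major, minor, patch = values
    return major, minor, patch, major * 1000 + minor * 100 + patch
```

Usage: `cudnn_version(open("/usr/local/cuda-11.2/include/cudnn_version.h").read())`.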

3.3.3. Register the paths


$ vim ~/.bashrc

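Following the installer's "Please make sure that" message, add these three lines at the bottom of the file (the third line additionally adds the CUPTI libraries):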


export PATH=/usr/local/cuda-11.2/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda-11.2/lib64:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=/usr/local/cuda-11.2/extras/CUPTI/lib64:$LD_LIBRARY_PATH
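After `source ~/.bashrc`, a quick way to confirm the two entries the installer asked for is to inspect the environment. The sketch below is hypothetical (names and the default root are mine) and only sees the environment of the shell that launched Python.

```python
import os
from typing import List, Mapping, Optional


def missing_cuda_paths(cuda_root: str = "/usr/local/cuda-11.2",
                       env: Optional[Mapping[str, str]] = None) -> List[str]:
    """List which of the installer's required path entries are absent."""
    env = os.environ if env is None else env
    wanted = {
        "PATH": os.path.join(cuda_root, "bin"),
        "LD_LIBRARY_PATH": os.path.join(cuda_root, "lib64"),
    }
    problems = []
    for var, directory in wanted.items():
        # normpath so '/usr/local/cuda-11.2/bin/' also counts; skip empty entries
        entries = {os.path.normpath(e) for e in env.get(var, "").split(os.pathsep) if e}
        if os.path.normpath(directory) not in entries:
            problems.append(f"{var} is missing {directory}")
    return problems
```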


$ source ~/.bashrc

$ nvcc -V


nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Sun_Feb_14_21:12:58_PST_2021

Cuda compilation tools, release 11.2, V11.2.152

Build cuda_11.2.r11.2/compiler.29618528_0
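Parsing `nvcc -V` confirms that the nvcc found on PATH is the 11.2 one, which catches an older toolkit that still comes first on PATH. A sketch assuming the "release X.Y, VX.Y.Z" line shown above; on the server, feed it `subprocess.check_output(["nvcc", "-V"], universal_newlines=True)`. The function name is hypothetical.

```python
import re
from typing import Tuple


def nvcc_release(nvcc_output: str) -> Tuple[Tuple[int, int], str]:
    """Return ((major, minor), full_version) from `nvcc -V` output."""
    match = re.search(r"release (\d+)\.(\d+), V([\d.]+)", nvcc_output)
    if match is None:
        raise ValueError("no 'release X.Y, VX.Y.Z' line in nvcc output")
    return (int(match.group(1)), int(match.group(2))), match.group(3)
```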

4. Verifying in TensorFlow & PyTorch

4.1. TensorFlow


import tensorflow as tf

print("GPU available:", tf.config.list_physical_devices('GPU'))

2021-09-17 22:00:49.154339: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcuda.so.1

2021-09-17 22:00:49.642161: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:00:49.642588: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1733] Found device 0 with properties:

pciBusID: 0000:07:00.0 name: GeForce RTX 2070 SUPER computeCapability: 7.5

coreClock: 1.815GHz coreCount: 40 deviceMemorySize: 7.79GiB deviceMemoryBandwidth: 417.29GiB/s

2021-09-17 22:00:49.642609: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0

2021-09-17 22:00:49.644067: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcublas.so.11

2021-09-17 22:00:49.644101: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcublasLt.so.11

2021-09-17 22:00:49.644726: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcufft.so.10

2021-09-17 22:00:49.644863: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcurand.so.10

2021-09-17 22:00:49.645373: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcusolver.so.11

2021-09-17 22:00:49.645722: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcusparse.so.11

2021-09-17 22:00:49.645804: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudnn.so.8

2021-09-17 22:00:49.645901: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:00:49.646354: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:00:49.646730: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1871] Adding visible gpu devices: 0

GPU available: [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]


from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())


2021-09-17 22:01:37.775630: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2021-09-17 22:01:37.776500: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:01:37.777294: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1733] Found device 0 with properties:

pciBusID: 0000:07:00.0 name: GeForce RTX 2070 SUPER computeCapability: 7.5

coreClock: 1.815GHz coreCount: 40 deviceMemorySize: 7.79GiB deviceMemoryBandwidth: 417.29GiB/s

2021-09-17 22:01:37.777409: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:01:37.778199: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:01:37.778914: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1871] Adding visible gpu devices: 0

2021-09-17 22:01:37.778968: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0

2021-09-17 22:01:38.264309: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1258] Device interconnect StreamExecutor with strength 1 edge matrix:

2021-09-17 22:01:38.264346: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1264] 0

2021-09-17 22:01:38.264352: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1277] 0: N

2021-09-17 22:01:38.264523: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:01:38.264961: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:01:38.265374: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-09-17 22:01:38.265758: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1418] Created TensorFlow device (/device:GPU:0 with 6673 MB memory) -> physical GPU (device: 0, name: GeForce RTX 2070 SUPER, pci bus id: 0000:07:00.0, compute capability: 7.5)

[name: "/device:CPU:0"

device_type: "CPU"

memory_limit: 268435456

locality {

}

incarnation: 1892938855603084678

, name: "/device:GPU:0"

device_type: "GPU"

memory_limit: 6997999616

locality {

bus_id: 1

links {

}

}

incarnation: 6822426675334327757

physical_device_desc: "device: 0, name: GeForce RTX 2070 SUPER, pci bus id: 0000:07:00.0, compute capability: 7.5"

]
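The device listings above show that TensorFlow sees the GPU, but not which CUDA and cuDNN versions it was built against. Note also that the log's libcudart.so.11.0 is, to my understanding, the library name shared by all CUDA 11.x runtimes, so it does not mean CUDA 11.0 was loaded. The sketch below assumes a TF 2.x release that provides `tf.sysconfig.get_build_info()`; the wrapper function is hypothetical and returns None when TensorFlow is not installed.

```python
from typing import Optional


def tf_gpu_summary() -> Optional[dict]:
    """Summarize TensorFlow's CUDA build info and visible GPUs; None if TF is not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # Versions TensorFlow was *built* against (keys are absent on CPU-only builds)
    info = tf.sysconfig.get_build_info()
    return {
        "cuda_version": info.get("cuda_version"),
        "cudnn_version": info.get("cudnn_version"),
        "gpus": [device.name for device in tf.config.list_physical_devices("GPU")],
    }
```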

4.2. PyTorch


In [1]: import torch

In [2]: torch.cuda.is_available()

Out[2]: True

In [3]: torch.cuda.device_count()

Out[3]: 1

In [4]: torch.cuda.get_device_name(0)

Out[4]: 'GeForce RTX 2070 SUPER'
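`torch.cuda.is_available()` only says that a GPU is usable. To see which CUDA runtime and cuDNN the installed wheel actually uses (relevant to the +cu111 note in section 1), PyTorch also exposes `torch.version.cuda` and `torch.backends.cudnn.version()`. A minimal sketch; the wrapper function is hypothetical and returns None when PyTorch is not installed.

```python
from typing import Optional


def torch_gpu_summary() -> Optional[dict]:
    """Collect the CUDA details PyTorch reports; None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    available = torch.cuda.is_available()
    return {
        # CUDA runtime version the wheel was built with (e.g. '11.1' for a +cu111 build)
        "cuda_version": torch.version.cuda,
        # cuDNN version as an integer, or None if cuDNN is unavailable
        "cudnn_version": torch.backends.cudnn.version(),
        "available": available,
        "devices": [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
        if available else [],
    }
```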